I’m dashing off a quick one of these this afternoon to comment on a story that’s going viral. This ticks every box for me, so here I am.

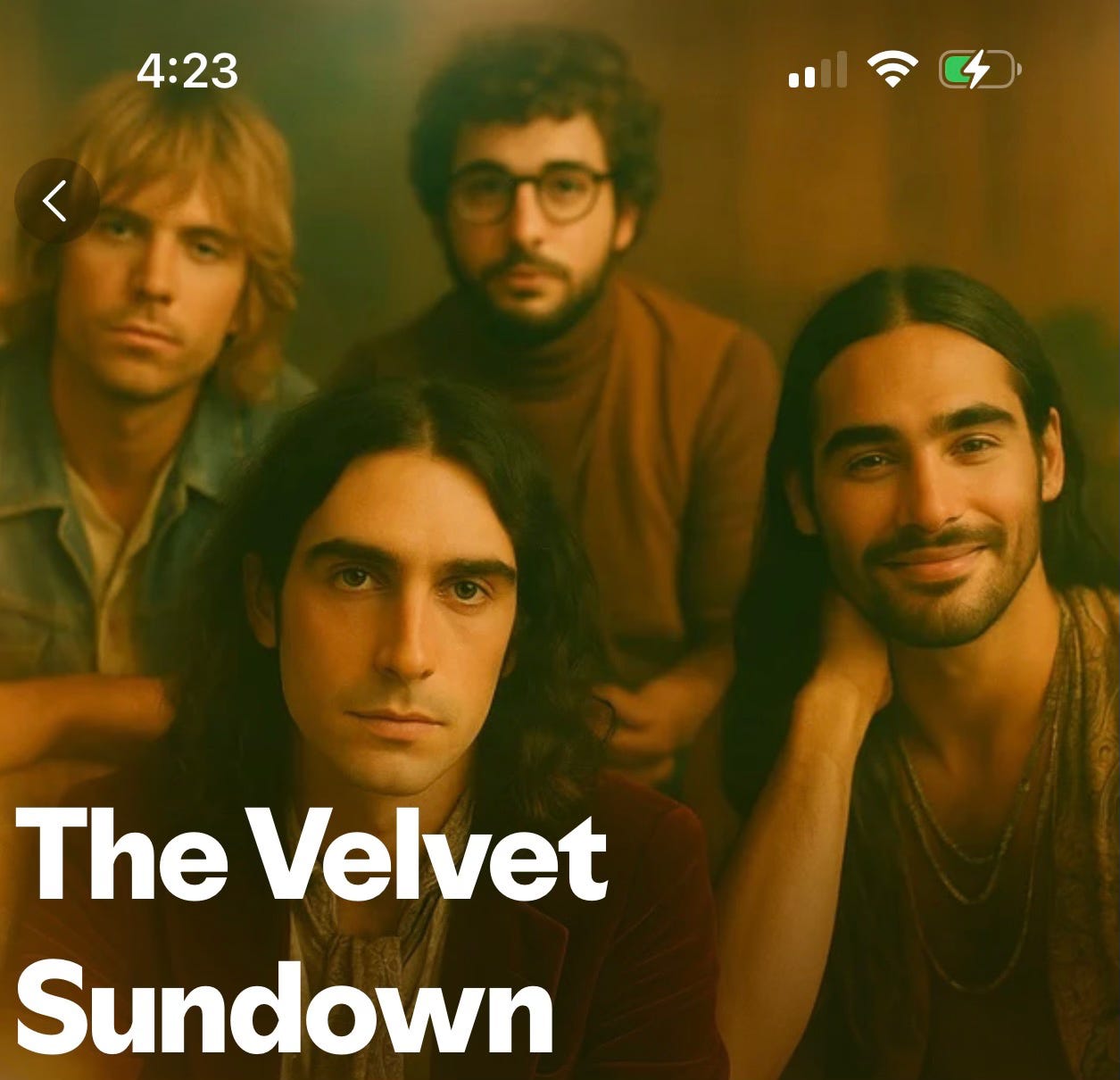

Yesterday morning I saw a Facebook post from an agent friend of mine about a band called The Velvet Sundown that’s been showing up on streaming platforms. Here’s the article. The TL;DR: they are allegedly a 100% AI creation, from the music to the bio to the hilariously, obviously fake band photos.

The story broke via a couple of Reddit threads pointing out that this AI slop has over 300K monthly listeners on Spotify, where the music appears on multiple curated playlists. The outrage is mostly about Spotify prioritizing AI slop over “real” artists, tipping their hand to a larger goal of eliminating human music altogether.

I feel like I might be sliding into deep cynicism because my first thought was this is music marketing. I texted a few of the best music social marketing people I know to get their take. No names, but they all agreed that this AI-created band might be an industry plant.

The outcome speaks for itself: a musical artist (whether human, part-human, or robot) has a viral moment that’s sending many thousands to their Spotify page to see what it’s all about. They’ve gained roughly 100K monthly listeners in 24 hours, going from about 300K to about 400K. And the story broke on Reddit, where users can stay anonymous.

I have so many (too many) thoughts about this. First, and perhaps most importantly, The Velvet Sundown sucks. I mean, it is absolutely terrible on every level. I share the outrage over people uploading AI content and passing it off as human music. I consider that a form of streaming fraud that should be punished. That said, any DIY musician who feels that this music will compete with their own original music for actual fans should probably consider another career. This music would pass as background music, but nobody who cares about music could ever actively listen to this shit.

This viral moment should never have happened, because Spotify should already be alerting its listeners to AI-generated content. It’s damning that they don’t have such a policy already. It’s hard to avoid the conclusion that they somehow benefit from keeping their customers in the dark. This needs to change immediately, on all platforms. The Velvet Sundown sucks (louder for the people in the back), but superficially it sounds exactly like a human creation. We need labeling, because practically everyone I’ve talked to cares deeply about this issue. We deserve to know.

It’s alarming that Congress isn’t prioritizing a comprehensive AI bill in coordination with the rest of the world. It’s literally an existential threat. The risks are enormous, and the upside of this technology is unclear (while the downsides are clear and immediate). The fact that we have to wonder whether it’s real or AI is a testament to how fast the technology is developing, but it’s also an indictment of the policies and priorities of government and big business. For more on the effort to label AI-generated music, visit my friends at AI:OK.

It’s my hope and expectation that the mediocrity of AI content, combined with the insult of platforms pushing it on us without notice, will unite us against this bullshit. I believe the next phase of the entertainment business will prioritize human artistry above all else. HOWEVER, that shouldn’t let the tech industry off the hook in any way. As we train our sights on streaming platforms that push AI content, we need to keep our main focus on the tech platforms stealing our music.

I’m not convinced that the godawful suck fest known as The Velvet Sundown is fully AI-generated, because I’m that cynical. But let’s assume it is. Who should get paid for this bad music that people are streaming, either by accident or as rubberneckers like me? I dunno, definitely Pink Floyd, The Doors, Tame Impala, Led Zeppelin, and 100 other acts so popular that every note they ever played has become a cliche when appropriated by a modern band. Here are my thoughts on who should get paid.

Whether or not the music is 100% AI, I’m going to assume the lyrics (an incoherent, war-obsessed string of endless cliches in simple rhyme schemes) were generated by prompting a large language model such as ChatGPT. I’m going to ignore the music publishing (aka “songwriting”) side and focus on the recording.

Whether or not it’s fair, we already have a structure for how tech platforms pay music rights holders. It’s the iTunes model: 70% to the rights holder and 30% to the house. Spotify has a similar split; supposedly they pay out 70% of gross to rights holders. I believe a generative platform trained on licensed music should also pay at least 70% of its gross earnings to the recording and publishing interests.

I’m not an expert on AI training, but I have a basic understanding of how unlicensed models such as Suno and Udio have been trained. There are two basic phases. The first is foundational model training, in which the model learns generally how to compose music. This requires a massive dataset: hundreds of millions of tracks across many styles, genres, and eras. It’s very likely that anyone who has any music on the internet has tracks in these datasets. I am NOT of the opinion that everyone with music on the internet should get paid for AI music.

The second phase of training is fine-tuning. This uses a much smaller set of (to quote my chatbot) “good or professionally-produced” music that includes chart hits, famous tracks, critically acclaimed albums, and in general music that has market value. Rights holders with tracks in these datasets should be paid. This will require full transparency from the tech platforms.

Transparency should extend to all of the tracks used to train a model, including which tracks have been prioritized to make the model sound like high-quality, market-relevant, commercial music. That’s probably tens or hundreds of thousands of tracks, and those rights holders should be paid the majority of a generative platform’s gross revenue, in acknowledgement that the platform’s product has no value without the training “data.” We called that data art, until tech platforms began systematically devaluing it with anti-art, anti-artist terms such as “content.”

I know, we need a lot more information, and much smarter people than me, to get this right. I’m admittedly an artist advocate, but I don’t think my positions are radical or unfair. I’ll take another chance to say The Velvet Sundown completely sucks, but this sort of content will get better quickly. It already sounds like quality music, until you really listen. It’s going to eat up a lot of the music market, especially “lean back” passive listening.

As a final thought, I have an idea for how someone could find out whether TVS (fan lingo) is a real band. With the current administration’s removal of all AI guardrails this might change, but for the time being the Copyright Office has opined that purely AI-generated works are not protectable under copyright. If someone were to blatantly steal TVS’s music and distribute it on Spotify (like, literally the same music), and the people behind TVS were to sue, they’d have to prove that the music is sufficiently human-created to qualify for copyright. That would come out in discovery. Hey, might be fun for someone who doesn’t mind getting sued by streaming fraudsters.

I’m compelled by the idea that this could easily be a test. In Liz Pelly’s book, one of Spotify’s business goals is to reduce COGS (cost of goods sold), and like the nameless, faceless, commoditized chillwave music they apparently foist on so many listeners, an AI band could be a COGS-less source of incremental revenue. I mean, sure, you might want to listen to Thee Osees or Pink Floyd if you’re a human at home being a human, but what about dentist offices and CVS stores? I’m only sort of kidding.

If TVS are real and this is marketing, I think the smartest move would be to unveil themselves and announce a tour within a week. We’ll see whether that happens. (To me it would be remarkable to come out and proudly claim to have made this music, but then again I think that about a lot of music.)

The question of why Spotify would do this seems pretty simple: they pay a couple of bucks to the AI company for the song and never have to pay royalties again.

This is the music you eventually land on when you call up a song you want to hear and then let the algorithm roll. I’m fairly confident that’s where the listener numbers come from.